Distributed Dragon Dictionary

Dragon provides the core capabilities needed to implement an efficient multi-node dictionary. Dragon’s transparent multi-node support gives the Distributed Dragon Dictionary a communication foundation that is dynamic and flexible enough for distributed services. In addition, Dragon managed memory supports efficient shared memory partitioning, which satisfies many of the requirements for handling arbitrary data structures in a dictionary. The Distributed Dragon Dictionary combines these technologies with Dragon's namespace and process management services to provide a productive, simple-to-use tool for communication among a set of distributed processes.

Architecture of Dragon Dictionary

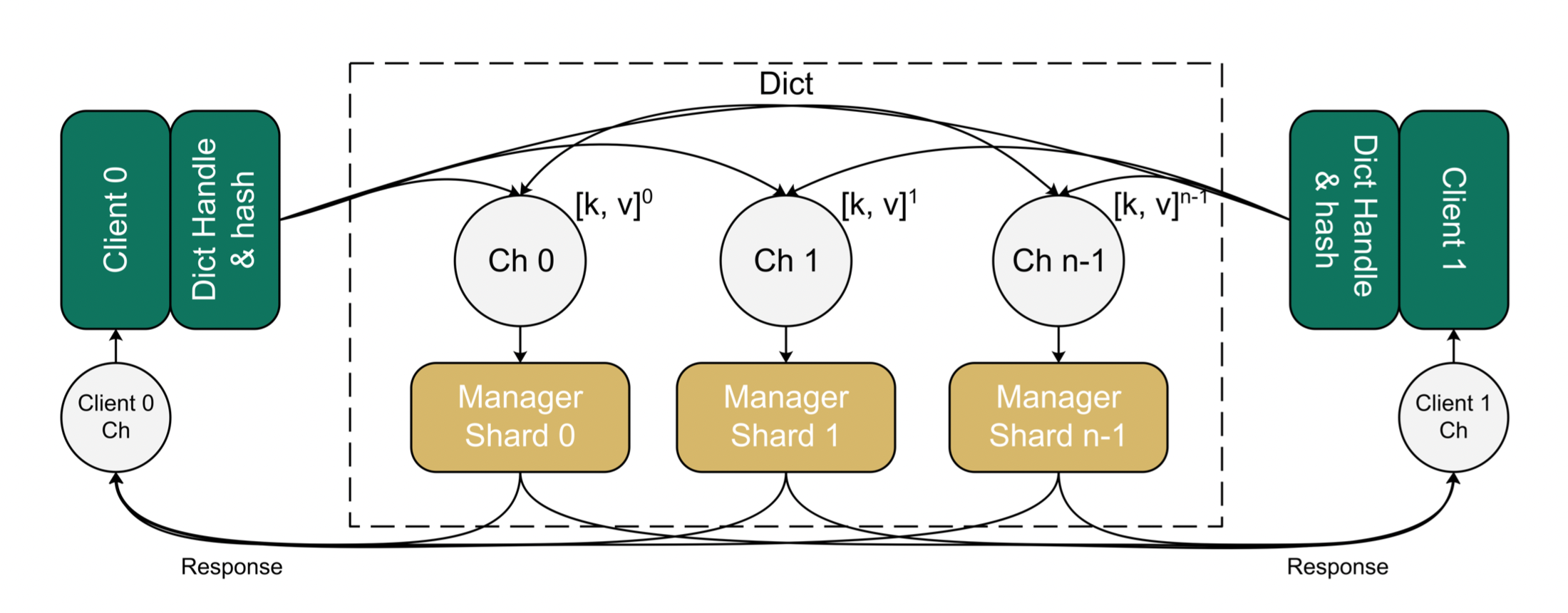

Fig. 15 High-level architecture of a Dragon Dictionary

From Python code, a user instantiates a dragon dictionary, specifying the number of back-end managers. During bring-up of a dragon dictionary, a pool of manager processes is started along with a collection of dragon channels used for communication between clients and managers. Each manager supporting the dragon dictionary holds one shard of the distributed dictionary and has a dedicated input connection that it monitors for operation requests, such as a put or get, coming from any client. The manager processes are also associated with a memory pool that stores the (key, value) pairs of the dictionary. Once initialized, a dragon dictionary can be shared with other processes. A common hash function translates a key to one of the manager channels. This translation occurs entirely locally within the client process, which allows the dictionary to scale efficiently.
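The client-side key translation described above can be illustrated with a minimal sketch in plain Python. This is not Dragon's implementation or API: the names `manager_index` and `ShardedDictSketch` are hypothetical, the SHA-256 digest stands in for whatever common hash function Dragon actually uses, and ordinary in-process `dict` objects stand in for the manager processes and their channels. Keys are assumed to be strings.

```python
import hashlib


def manager_index(key: str, num_managers: int) -> int:
    """Map a key to a manager index with a hash that is stable across processes.

    Python's built-in hash() is salted per process for str objects, so it
    would not give every client the same answer. A digest of the key bytes
    is used here instead (an assumption made for this sketch).
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_managers


class ShardedDictSketch:
    """Hypothetical stand-in: each shard plays the role of one manager."""

    def __init__(self, num_managers: int):
        self.num_managers = num_managers
        # One local dict per "manager"; in a real system these would be
        # separate processes reached through their input channels.
        self.shards = [dict() for _ in range(num_managers)]

    def put(self, key: str, value) -> None:
        # The routing decision is computed locally, without consulting
        # any other process.
        self.shards[manager_index(key, self.num_managers)][key] = value

    def get(self, key: str):
        return self.shards[manager_index(key, self.num_managers)][key]


d = ShardedDictSketch(num_managers=4)
d.put("alpha", 1)
d.put("beta", 2)
print(d.get("alpha"), d.get("beta"))
```

Because every client computes the same index for the same key, no central lookup is needed to find the manager that owns a key, which is the property the paragraph above credits for the dictionary's scalability.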

Results on a multi-node setup

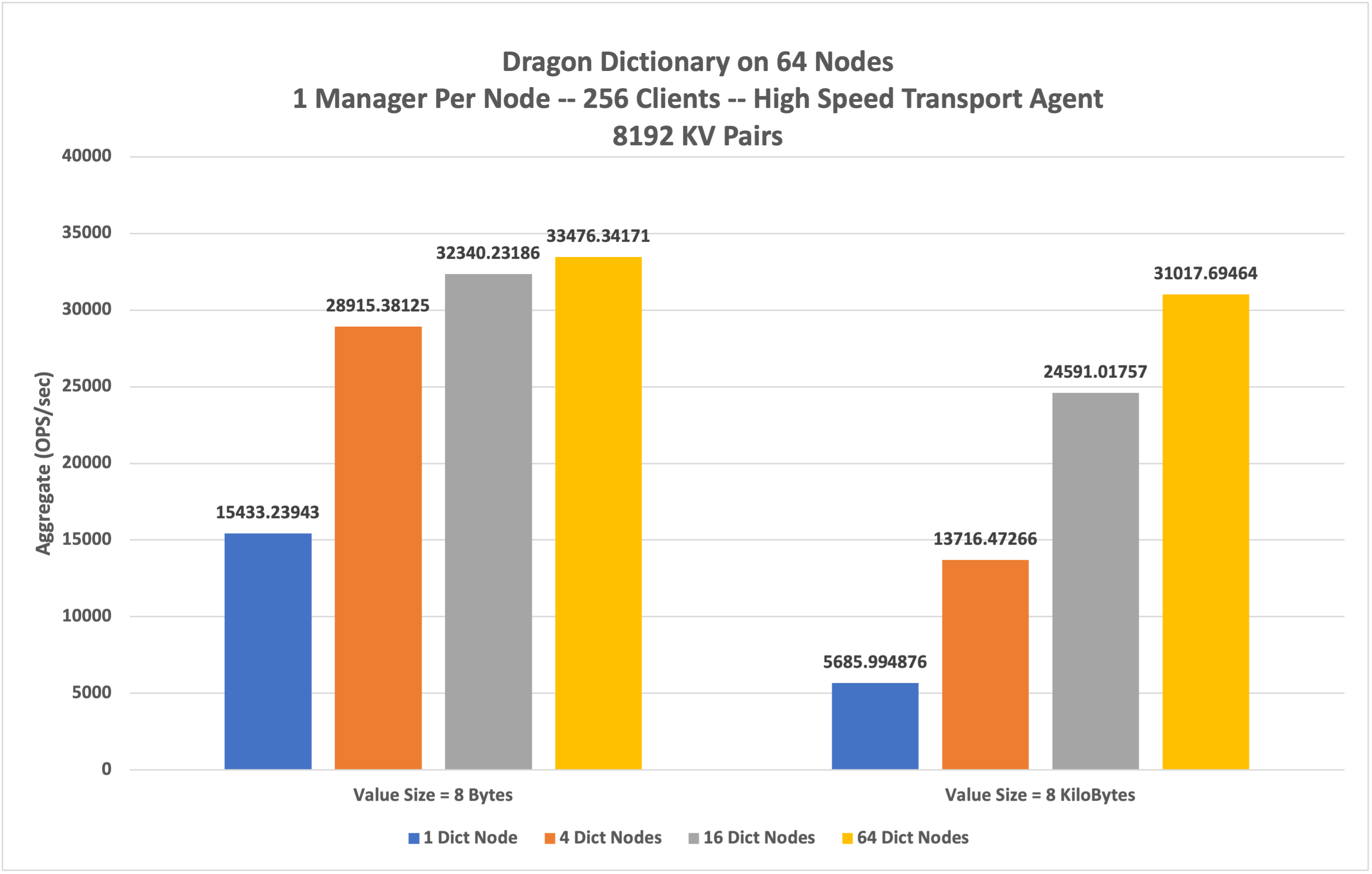

Fig. 16 shows the results of aggregated operations on a dragon dictionary in a 64-node setup using the High Speed Transport Agent (HSTA). The results were collected with 256 distributed client processes performing operations on the dictionary in parallel, with a total of 8192 key-value pairs in the dictionary. The dictionary is spawned across 1 to 64 nodes, with one manager worker per node. Value sizes of 8 bytes and 8 kilobytes are used, and each key has a constant size of 30 bytes. The aggregate rate of operations increases as the dictionary managers are spawned across more nodes, which demonstrates the advantage of distributing the dictionary.

Fig. 16 Results on a multi-node setup